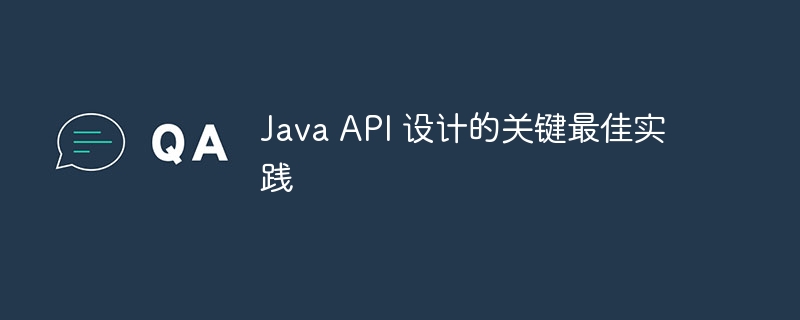

java 框架为高效流式处理提供了支持,包括:apache kafka(高吞吐率、低延迟的消息队列)apache storm(并行处理、高容错的实时计算框架)apache flink(统一的流和批处理框架,支持低延迟和状态管理)

Java 框架处理流式处理

流式处理涉及实时处理不断流入的大量数据,这对于构建实时分析、监控和事件驱动的应用程序至关重要。Java 框架为高效处理流式数据提供了以下功能:

1. Apache Kafka

立即学习“Java免费学习笔记(深入)”;

Apache Kafka 是一个分布式消息队列框架,用于在高吞吐率和低延迟的情况下存储和处理流数据。它提供了:

- 数据分区

- 负载平衡

- 容错能力

代码示例:

import org.apache.kafka.clients.consumer.ConsumerRecord;

import org.apache.kafka.clients.consumer.ConsumerRecords;

import org.apache.kafka.clients.consumer.KafkaConsumer;

public class KafkaConsumerExample {

public static void main(String[] args) {

Properties properties = new Properties();

properties.put(ConsumerConfig.BOOTSTRAP_SERVERS_CONFIG, "localhost:9092");

properties.put(ConsumerConfig.GROUP_ID_CONFIG, "test-group");

Consumer<String, String> consumer = new KafkaConsumer<>(properties);

consumer.subscribe(Arrays.asList("test-topic"));

while (true) {

ConsumerRecords<String, String> records = consumer.poll(1000);

for (ConsumerRecord<String, String> record : records) {

System.out.printf("Received key: %s, value: %sn", record.key(), record.value());

}

}

}

}2. Apache Storm

Apache Storm 是一个分布式实时计算框架,用于处理大规模、低延迟的数据流。它提供:

- 并行处理

- 高容错能力

- 可扩展性

代码示例:

import org.apache.storm.Config;

import org.apache.storm.LocalCluster;

import org.apache.storm.spout.SpoutOutputCollector;

import org.apache.storm.task.OutputCollector;

import org.apache.storm.task.TopologyContext;

import org.apache.storm.topology.OutputFieldsDeclarer;

import org.apache.storm.topology.TopologyBuilder;

import org.apache.storm.topology.base.BaseRichSpout;

import org.apache.storm.topology.base.BaseRichBolt;

import org.apache.storm.tuple.Fields;

import org.apache.storm.tuple.Tuple;

import org.apache.storm.tuple.Values;

public class StormTopologyExample {

public static void main(String[] args) throws Exception {

TopologyBuilder builder = new TopologyBuilder();

builder.setSpout("spout", new WordSpout(), 1);

builder.setBolt("count-bolt", new WordCountBolt(), 1)

.shuffleGrouping("spout");

Config config = new Config();

config.setDebug(true);

LocalCluster cluster = new LocalCluster();

cluster.submitTopology("test-topology", config, builder.createTopology());

Thread.sleep(10000);

cluster.shutdown();

}

public static class WordSpout extends BaseRichSpout {

private SpoutOutputCollector collector;

private String[] words = {"hello", "world", "this", "is", "a", "test"};

@Override

public void open(Map<String, Object> conf, TopologyContext context, SpoutOutputCollector collector) {

this.collector = collector;

}

@Override

public void nextTuple() {

for (String word : words) {

collector.emit(new Values(word));

}

}

@Override

public void declareOutputFields(OutputFieldsDeclarer declarer) {

declarer.declare(new Fields("word"));

}

}

public static class WordCountBolt extends BaseRichBolt {

private OutputCollector collector;

@Override

public void prepare(Map<String, Object> conf, TopologyContext context, OutputCollector collector) {

this.collector = collector;

}

@Override

public void execute(Tuple tuple) {

String word = tuple.getStringByField("word");

Integer count = tuple.getIntegerByField("count");

collector.emit(new Values(word, count + 1));

}

@Override

public void declareOutputFields(OutputFieldsDeclarer declarer) {

declarer.declare(new Fields("word", "count"));

}

}

}3. Apache Flink

Apache Flink 是一个统一的流和批处理框架,支持实时应用的构建。它提供:

- 低延迟

- 高吞吐率

- 状态管理

代码示例:

import org.apache.flink.streaming.api.datastream.DataStream;

import org.apache.flink.streaming.api.environment.StreamExecutionEnvironment;

public class FlinkExample {

public static void main(String[] args) throws Exception {

StreamExecutionEnvironment env = StreamExecutionEnvironment.getExecutionEnvironment();

DataStream<String> dataStream = env.socketTextStream("localhost", 9000);

dataStream.flatMap(value -> Arrays.asList(value.split(" "))).filter(word -> !word.isEmpty())

.countWindowAll(10).sum(1).print();

env.execute();

}

}通过使用这些框架,Java 开发人员可以构建高效且可扩展的流式处理应用程序,以实时响应大数据流。

以上就是java框架如何处理流式处理?的详细内容,更多请关注php中文网其它相关文章!

版权声明:本文内容由网友自发贡献,版权归原作者所有,本站不承担相应法律责任。如您发现有涉嫌抄袭侵权的内容,请联系 yyfuon@163.com